Most organizations discover the same painful truth when scaling their AI initiatives. The model is not the problem. The real bottleneck is running AI in production at a speed, cost and reliability that the business can sustain. If your AI features feel slow, inconsistent or unexpectedly expensive, the issue is rarely the model. It is almost always the inference platform behind it.

This is why companies are now treating AI inference platforms as a core business decision. The platform you choose will influence customer experience, operational cost, compliance posture and your ability to expand AI across the organization. Select the wrong one and costs can escalate quickly. Choose the right one and AI becomes a stable, scalable part of your workflow.

In this article, Titan Technology breaks down what AI inference platforms really mean for business leaders, the types of providers available today and how to evaluate them through a strategy-first lens. To learn more about how our team supports enterprises in developing AI and cloud-driven solutions, please visit our services page.

What AI Inference Platforms Mean for Business Outcomes

Many organizations start their AI journey by selecting a model, but the true business results emerge only when that model is deployed at scale. AI initiatives often perform well in controlled pilots but struggle once they reach real customer interactions, fluctuating traffic and tight service-level expectations. This is where the choice of AI inference platform becomes critical.

An AI inference platform influences four essential business outcomes that determine whether AI becomes a sustainable capability or remains a costly experiment.

1. Cost Control and Predictable Spending

Inference is where operational AI costs accumulate because every interaction with a user or system triggers computation. The right platform helps organizations manage these costs through transparent pricing, resource optimization and stable performance under varying traffic. Without these controls, businesses often face unpredictable expenses that escalate as AI usage grows.

2. Customer Experience and Service Quality

AI-driven applications rely on fast and consistent responses. Slow inference affects customer satisfaction, employee productivity and adoption rates. A reliable inference platform ensures that performance remains stable even during peak usage, supporting a seamless experience that strengthens trust and engagement.

3. Operational Scalability and Business Agility

As AI expands across departments and workloads, organizations need infrastructure that can scale without becoming a barrier. A suitable inference platform supports rapid feature rollout, multi-team adoption and the ability to test new use cases without redesigning existing systems. This strengthens innovation capacity and accelerates business impact.

4. Governance, Security and Compliance

Enterprises must operate within strict data, security and regulatory requirements. An effective inference platform supports proper governance, access control and audit capabilities. These elements protect the organization as AI moves into workflows that handle sensitive information and mission-critical operations.

Across the businesses we support in AI and cloud modernization, these four dimensions consistently determine whether AI delivers measurable value or stalls before reaching its full potential. They provide a clear foundation for evaluating any AI inference provider and choosing a platform that aligns with long-term business strategy.

The Market Landscape: Three Types of AI Inference Platforms Businesses Commonly Consider

As enterprises expand their AI initiatives, they quickly discover that not all AI inference platforms are built for the same needs. Some are designed for highly regulated environments, others prioritize rapid experimentation and ease of use, while a third group focuses on flexibility and cost efficiency. Understanding these categories helps leaders narrow their options before evaluating specific providers.

The market today generally falls into three groups: cloud providers, foundation model labs and specialist open-source platforms. Each group offers a distinct value proposition and aligns with different business priorities.

1. Cloud Providers

(Azure, AWS, Google Cloud)

Cloud providers remain the most established option for enterprises that prioritize security, compliance and operational stability. They offer managed AI services with strong governance features, global availability and integration across existing infrastructure. Cloud-native inference platforms often appeal to organizations that already rely on a specific cloud ecosystem or operate in regulated industries such as finance, healthcare or public services.

From a business perspective, cloud providers deliver predictable governance and enterprise-grade reliability. The trade-off is that setup and configuration may require more architectural planning, especially for teams without deep cloud expertise.

2. Foundation Model Labs

(OpenAI, Anthropic, Perplexity and others)

Foundation model labs provide direct access to their flagship models through simple, developer-friendly APIs. These platforms prioritize ease of use and rapid time-to-value, making them ideal for organizations launching AI pilots, MVPs or early customer-facing features. Teams can experiment quickly, build prototypes and gather feedback without heavy infrastructure work.

The primary consideration for business leaders is what happens at scale. Costs can rise quickly as usage grows, and long-term control of infrastructure is limited. These platforms excel in the early stages of AI adoption but require financial and architectural planning once the business pushes toward larger-scale deployment.

3. Specialist Open-Source Providers

(Hugging Face, Replicate and similar platforms)

Specialist providers focus on open-weight models and flexible deployment options. They offer greater customization and fine-tuning capabilities, allowing businesses to tailor AI systems to specific domains or operational needs. These platforms appeal to organizations that want deeper control, prefer open-source ecosystems or have strong internal technical teams capable of optimizing workloads.

The opportunity lies in flexibility and potential cost savings. The challenge is that these platforms may provide fewer enterprise compliance features and often require more internal engineering capacity to manage effectively.

Across these three categories, each group serves a different business scenario. Cloud providers deliver stability and governance. Foundation model labs offer speed and simplicity. Specialist platforms provide customization and flexibility. Understanding these distinctions helps clarify which direction aligns with your operational needs and long-term AI strategy.

In the next section, we examine the business-critical factors that help narrow your choices and reduce the risk of selecting an AI inference platform that does not scale with your organization.

The Non-Negotiables: Business Filters That Prevent Costly Mistakes

Before comparing individual providers, enterprises must establish a set of “non-negotiables.” These are the foundational requirements that determine whether an AI inference platform can support long-term business goals. Organizations that skip this step often run into unexpected expenses, compliance risks or performance issues once AI workloads scale.

Below are the four business filters that help companies narrow the field quickly and confidently.

1. Model Availability and Long-Term Viability

The first question any business must answer is whether a platform supports the models required today and the models that may become important in the next 12 to 24 months. Some platforms offer exclusive access to proprietary models, while others specialize in open-weight or fine-tuned options.

From a strategic perspective, leaders should consider the long-term benefits of flexibility. Selecting a platform that restricts future model choices can create operational risk, increase switching costs and limit innovation. Choosing an inference platform is not only about capabilities today. It is about ensuring the business will not be constrained tomorrow.
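One common way to keep future model choices open is to have application code depend on a thin internal interface rather than on any single vendor's SDK. The Python sketch below illustrates the idea under stated assumptions: `InferenceBackend`, `CloudBackend`, `OpenWeightBackend` and `InferenceClient` are hypothetical names, and the adapters return placeholder strings where a real implementation would call a provider's API.

```python
from typing import Protocol


class InferenceBackend(Protocol):
    """Hypothetical contract that every provider adapter must satisfy."""

    name: str

    def generate(self, prompt: str) -> str: ...


class CloudBackend:
    """Placeholder adapter; a real one would call a cloud provider's SDK."""

    name = "cloud-provider"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class OpenWeightBackend:
    """Placeholder adapter; a real one would call a hosted open-weight model."""

    name = "open-weight"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class InferenceClient:
    """Application code talks to this wrapper, never to a vendor SDK directly."""

    def __init__(self, backend: InferenceBackend) -> None:
        self._backend = backend

    def switch_backend(self, backend: InferenceBackend) -> None:
        # Changing providers becomes a configuration change, not a rewrite.
        self._backend = backend

    def ask(self, prompt: str) -> str:
        return self._backend.generate(prompt)


client = InferenceClient(CloudBackend())
print(client.ask("Summarize open support tickets"))
client.switch_backend(OpenWeightBackend())
print(client.ask("Summarize open support tickets"))
```

Because callers only ever see `ask`, moving to a different provider touches one adapter instead of every feature that uses AI, which is what keeps switching costs low.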

2. Total Cost of Ownership and Predictable Economics

Inference is where AI costs accumulate as usage increases, and pricing models vary widely across providers. Token-based pricing, which charges for each input and output token, is effective for experimentation but can become challenging to manage at scale. Compute-based pricing, which charges for provisioned capacity such as GPU hours, can provide transparency for mature workloads, but it requires internal expertise to keep that capacity efficiently utilized.

Business leaders need clarity on how costs grow over time. A platform should offer predictable economics, visibility into consumption patterns and the ability to optimize workloads without sacrificing performance. Without these capabilities, organizations risk overspending as traffic increases.
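The trade-off between the two pricing models becomes clearer with a quick break-even calculation. The sketch below compares a hypothetical per-token price with a hypothetical dedicated instance billed by the hour; every price and throughput figure is an illustrative assumption, not a quote from any provider.

```python
import math

# Illustrative assumptions only; substitute figures from real vendor quotes.
TOKEN_PRICE_PER_MILLION = 2.00          # USD per 1M tokens, input and output blended
INSTANCE_COST_PER_HOUR = 4.00           # USD per hour for one dedicated instance
INSTANCE_TOKENS_PER_HOUR = 5_000_000    # sustained throughput of one instance
HOURS_PER_MONTH = 730


def token_based_cost(tokens_per_month: int) -> float:
    """Pay-per-use: cost scales linearly with volume."""
    return tokens_per_month / 1_000_000 * TOKEN_PRICE_PER_MILLION


def compute_based_cost(tokens_per_month: int) -> float:
    """Provisioned capacity: instances are billed around the clock, busy or idle."""
    capacity_per_instance = INSTANCE_TOKENS_PER_HOUR * HOURS_PER_MONTH
    instances = max(1, math.ceil(tokens_per_month / capacity_per_instance))
    return instances * INSTANCE_COST_PER_HOUR * HOURS_PER_MONTH


def break_even_tokens() -> float:
    """Monthly volume at which one instance costs the same as per-token billing."""
    return INSTANCE_COST_PER_HOUR * HOURS_PER_MONTH / TOKEN_PRICE_PER_MILLION * 1_000_000


for volume in (100_000_000, 2_000_000_000, 10_000_000_000):
    print(f"{volume:>14,} tokens/month: token-based ${token_based_cost(volume):>9,.0f}, "
          f"compute-based ${compute_based_cost(volume):>9,.0f}")
print(f"Break-even for one instance: {break_even_tokens():,.0f} tokens/month")
```

Under these assumptions, per-token billing is far cheaper at pilot volumes, while provisioned capacity becomes cheaper once monthly volume passes roughly 1.46 billion tokens, provided the team can keep the instances busy.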

3. Governance, Security and Compliance Requirements

Enterprises operate under strict data and regulatory obligations. Any AI inference platform must support appropriate access controls, data isolation, encryption and audit capabilities. These requirements become non-negotiable when AI is applied to customer information, financial data or sensitive internal documents.

Selecting a platform without the right compliance posture can delay deployments, create legal exposure and undermine trust across the organization. Governance and security must be treated as strategic criteria, not optional features.

4. Reliability and Business Continuity

AI applications must work consistently to deliver value. If inference performance drops or availability fluctuates, customer experience and operational workflows are immediately affected. A suitable platform provides the stability needed to support real-time interactions, peak demand and multi-region usage.

Downtime or performance degradation carries a direct business cost. This makes reliability and continuity essential filters before any further evaluation.
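At the application level, one common continuity pattern is an ordered fallback chain: if the primary region or provider fails, the request is retried on the next one. The sketch below simulates an outage with hypothetical `primary_region` and `secondary_region` functions; a production version would also add per-call timeouts, health checks and alerting.

```python
from collections.abc import Callable, Sequence


class AllBackendsFailed(RuntimeError):
    """Raised when every backend in the chain has failed."""


def generate_with_fallback(
    prompt: str,
    backends: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each backend in priority order; return (backend_name, answer)."""
    errors: list[str] = []
    for name, call in backends:
        try:
            return name, call(prompt)
        except Exception as exc:  # in production, catch the SDK's specific errors
            errors.append(f"{name}: {exc}")
    raise AllBackendsFailed("; ".join(errors))


def primary_region(prompt: str) -> str:
    raise TimeoutError("primary region timed out")  # simulated outage


def secondary_region(prompt: str) -> str:
    return f"answer to {prompt!r}"


used, answer = generate_with_fallback(
    "Where is my order?",
    [("primary", primary_region), ("secondary", secondary_region)],
)
print(used, answer)
```

A fallback only protects continuity if the secondary option is exercised regularly and has the capacity to absorb the primary's traffic, which is worth confirming during platform evaluation.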

Across the enterprises we support in AI modernization, these non-negotiables consistently determine which inference platforms remain viable as AI usage expands. They help reduce the risk of choosing a platform that performs well in pilots but fails under real operating conditions.

The Nice-to-Haves That Improve Efficiency and Accelerate AI Adoption

Once the foundational requirements are met, organizations can evaluate additional qualities that make an AI inference platform not only viable but highly effective. These features are not mandatory, yet they significantly enhance productivity, reduce operational friction and increase the organization’s ability to scale AI initiatives with confidence.

Below are the three characteristics that often separate a good platform from one that supports long-term innovation.

1. Developer Productivity and Faster Time-to-Market

A platform that is easy to use provides immediate benefits for teams working under tight timelines. When developers can experiment quickly, test ideas and deploy updates without unnecessary complexity, the organization moves faster. This is especially important for companies building AI-driven customer experiences or internal copilots where rapid iteration determines competitive advantage.

Clear documentation, intuitive interfaces and strong developer tooling help reduce onboarding time and shorten the cycle between concept and production release. While these elements may seem minor at first, they directly contribute to the speed at which the business can deliver value.

2. Cost Transparency and Budget Predictability

Even if a platform meets core budget requirements, organizations benefit from tools that provide granular insights into usage and cost trends. Transparent dashboards, real-time monitoring and predictable pricing models allow finance and engineering teams to collaborate more effectively. They also enable leaders to forecast costs with greater accuracy and avoid surprises as adoption increases.

Cost transparency becomes especially valuable during scaling phases, when usage grows beyond initial projections. A platform that makes cost drivers clear helps businesses optimize workloads before expenses escalate.
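Cost transparency often starts with attributing usage to the teams that generate it. The sketch below aggregates hypothetical usage records into spend per team; the model names and per-million-token prices are placeholders, and in practice the records would come from a provider's usage export or your own request logs.

```python
from collections import defaultdict
from dataclasses import dataclass

# Placeholder prices in USD per 1M tokens: (input, output).
PRICES = {
    "large-model": (3.00, 15.00),
    "small-model": (0.25, 1.25),
}


@dataclass
class UsageRecord:
    team: str
    model: str
    input_tokens: int
    output_tokens: int


def cost_by_team(records: list[UsageRecord]) -> dict[str, float]:
    """Sum spend per team so each budget owner sees their own cost drivers."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        input_price, output_price = PRICES[r.model]
        totals[r.team] += (r.input_tokens * input_price
                           + r.output_tokens * output_price) / 1_000_000
    return dict(totals)


records = [
    UsageRecord("support", "large-model", 40_000_000, 8_000_000),
    UsageRecord("support", "small-model", 100_000_000, 20_000_000),
    UsageRecord("marketing", "large-model", 5_000_000, 2_000_000),
]

for team, cost in sorted(cost_by_team(records).items(), key=lambda kv: -kv[1]):
    print(f"{team:<10} ${cost:,.2f}")
```

Breaking spend down by team and model can also reveal where a smaller model might handle routine requests, giving finance and engineering a shared view of the cost drivers before expenses escalate.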

3. Flexibility to Experiment and Support New Use Cases

AI adoption does not follow a linear path. Most organizations begin with one or two use cases but quickly identify additional opportunities once initial value is proven. A flexible inference platform supports this growth by making it easy to test new models, integrate new capabilities or adapt to changes in business strategy.

The ability to experiment without rearchitecting core systems reduces risk and empowers teams to explore new AI-driven ideas. This flexibility ensures that early AI investments continue to generate returns as the organization evolves.

These nice-to-have qualities are increasingly important for organizations seeking to scale AI beyond isolated pilots. They improve efficiency, support innovation and create an environment where teams can grow AI capabilities with confidence.

Which AI Inference Platform Fits Your Business?

After reviewing the core requirements and the additional factors that enhance adoption, the next step is understanding which category of AI inference platforms aligns best with your organization’s goals. Each option serves a different set of priorities, and the best choice depends on business context rather than technical preference.

The three categories introduced earlier—cloud providers, foundation model labs and specialist platforms—offer distinct advantages. Below is a practical way to determine when each one fits.

1. When Cloud Providers Are the Best Fit

Cloud providers such as Azure, AWS and Google Cloud are well-suited for organizations that prioritize stability, governance and long-term scalability. They offer mature infrastructure, robust compliance features and strong integration with enterprise systems. This makes them a natural fit for companies that already operate within a major cloud ecosystem or require strict data management controls.

Cloud platforms are often the most suitable choice when an organization plans to adopt AI extensively across multiple departments. They support predictable growth and provide the operational backbone required for mission-critical applications.

2. When Foundation Model Labs Make the Most Sense

Platforms operated by model developers such as OpenAI, Anthropic or Perplexity are ideal for teams that need to move fast. They offer simple APIs, clear pricing and rapid setup, which shortens the time between idea and initial deployment. These strengths make them well-suited for prototypes, early pilots or customer-facing features where the priority is speed rather than deep infrastructure control.

However, leaders should monitor cost trends and prepare for architectural adjustments as usage expands. These platforms are excellent for early innovation but may require additional planning once demand grows.

3. When Specialist Open-Source Providers Are the Best Match

Providers like Hugging Face and Replicate appeal to organizations that want customization and greater control over their AI workflows. They support open-weight models and fine-tuning, which provides data and engineering teams with the flexibility to build domain-specific AI systems. They can also be cost-effective when optimized properly.

These platforms are most suitable for organizations with strong technical capabilities and clear requirements for model control. They provide freedom to experiment but require internal capacity to manage and maintain more complex AI operations.

Across the enterprises we work with, no single platform serves every scenario. The right choice depends on factors such as internal capability, regulatory obligations, expected traffic and the organization’s long-term AI strategy. Once these elements are clear, selecting an inference platform becomes a strategic decision rather than a technical gamble.

When to Consider Hardware Innovators for AI Inference

Beyond mainstream cloud platforms and model provider APIs, a smaller group of emerging vendors is focused on specialized hardware designed specifically for high-performance AI inference. These innovators are not the default choice for most organizations, yet they can offer meaningful advantages in situations where speed, efficiency or scale becomes a competitive differentiator.

While their solutions are more targeted, understanding their role helps enterprises anticipate future infrastructure needs and evaluate strategic opportunities.

1. Ultra-Low Latency for Real-Time Applications

Some industries rely on real-time decision-making where milliseconds matter. Examples include trading systems, conversational agents, autonomous operations or real-time analytics. Hardware innovators in this space build systems optimized for extremely low-latency inference.

For organizations where delays impact revenue, safety or customer experience, these platforms can offer an advantage that traditional cloud infrastructure may not provide.

2. High-Volume Workloads That Demand Cost Efficiency

Companies generating millions of daily requests may benefit from specialized hardware that reduces the cost per request. These solutions are often designed to optimize specific model architectures, which can lower energy usage and improve throughput.

Businesses that expect sustained high traffic or operate in industries where margins are tight may consider this category as part of a cost efficiency strategy.

3. Support for Exceptionally Large or Custom Models

Some organizations operate proprietary or very large models that require infrastructure beyond standard cloud offerings. Hardware innovators often support configurations designed for these demanding workloads, enabling teams to run models that would otherwise be impractical or too expensive.

For enterprises that build advanced internal capabilities or apply AI to complex scientific or engineering problems, this flexibility can be a significant advantage.

4. Not for Most Organizations, but Valuable for the Right Ones

While these platforms offer unique strengths, they typically require a deeper investment in engineering and a higher degree of operational maturity. For that reason, most organizations will not adopt them early in their AI journey. They become relevant when AI capabilities grow and infrastructure becomes a strategic asset rather than a commodity.

Enterprises evaluating this category should approach it with a long-term perspective. It can be an opportunity for differentiation, but only when aligned with clear operational requirements and business goals.

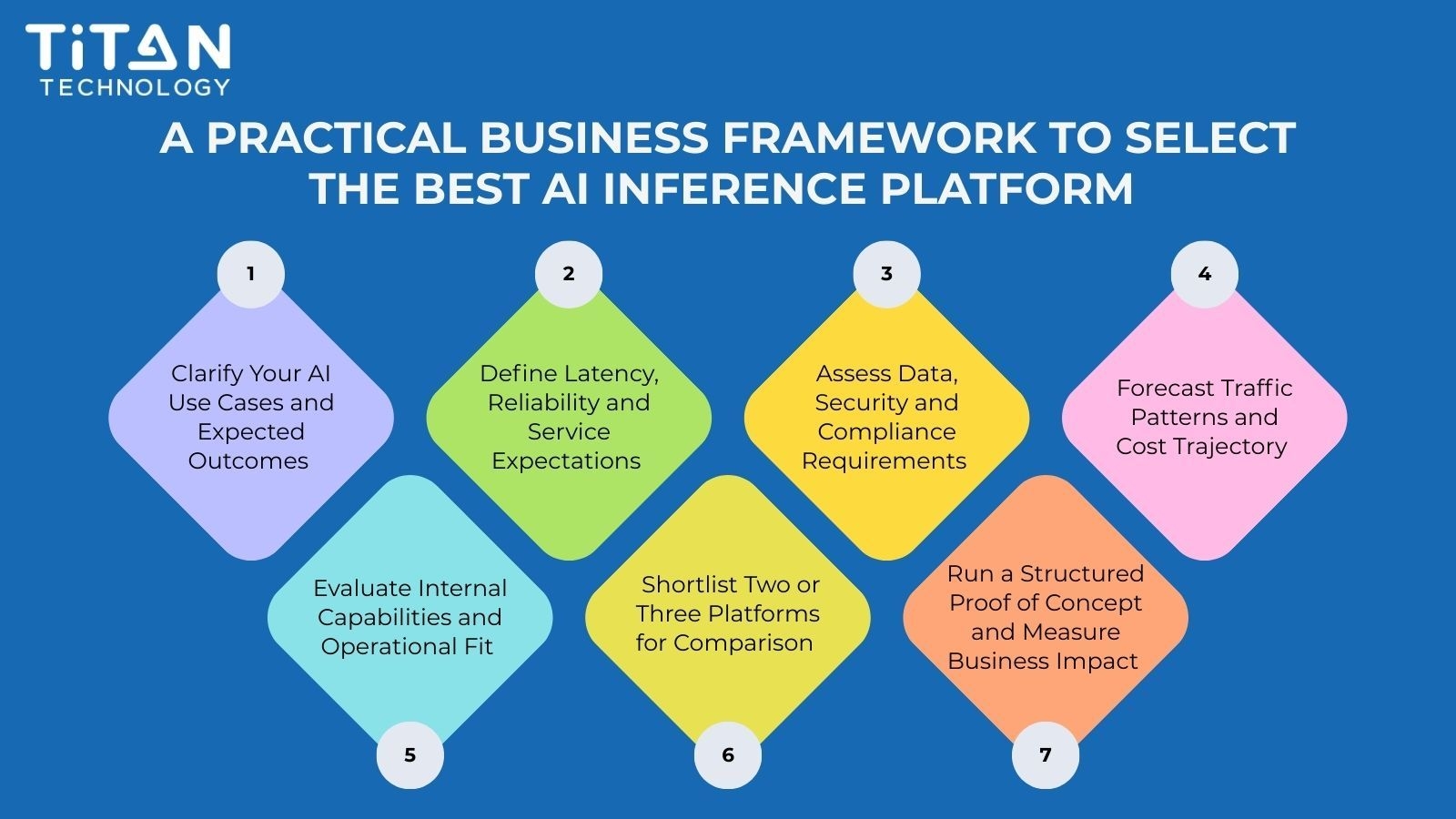

A Practical Business Framework to Select the Best AI Inference Platform

Choosing the right AI inference platform is not purely a technical exercise. It is a business decision that affects cost structure, customer satisfaction, operational resilience and long-term innovation. With numerous providers and pricing models available, leaders require a structured approach to evaluate options without getting bogged down in technical details.

The following framework provides a practical approach for organizations to assess their needs and identify a platform that aligns with both their short-term objectives and long-term strategy.

1. Clarify Your AI Use Cases and Expected Outcomes

Before comparing platforms, organizations must determine what they need AI to achieve. Use cases such as chat assistants, recommendation engines, document automation or real-time analytics place different demands on the inference layer. Clear use case definitions help narrow the field and prevent overinvestment in capabilities that are unnecessary for business goals.

2. Define Latency, Reliability and Service Expectations

AI features often sit close to customer interactions or critical internal workflows. This means performance matters. Leaders should define acceptable response times, uptime expectations and the degree of performance consistency required. These criteria make it easier to assess providers based on real operational needs rather than theoretical benchmarks.
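Uptime expectations are easier to discuss with stakeholders once they are translated into allowed downtime. The small calculation below converts an availability target into minutes of permitted unavailability over a 30-day month; the targets shown are examples, not recommendations.

```python
def downtime_budget_minutes(uptime_target: float, days: int = 30) -> float:
    """Minutes of unavailability allowed in a period for a given uptime fraction."""
    if not 0 < uptime_target <= 1:
        raise ValueError("uptime_target must be a fraction between 0 and 1")
    return (1 - uptime_target) * days * 24 * 60


for target in (0.99, 0.999, 0.9999):
    minutes = downtime_budget_minutes(target)
    print(f"{target:.2%} uptime allows about {minutes:.1f} minutes of downtime per 30 days")
```

Each additional "nine" cuts the budget tenfold, from roughly seven hours a month at 99% to about four minutes at 99.99%, which is why the target should reflect the real business cost of an outage rather than a default figure.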

3. Assess Data, Security and Compliance Requirements

Organizations handling sensitive or regulated data need platforms that support their governance obligations. Leaders should map compliance requirements early and use them as a filter rather than an afterthought. This step eliminates platforms that cannot meet the required security standards and reduces risk during implementation.

4. Forecast Traffic Patterns and Cost Trajectory

AI usage rarely grows linearly. Many teams experience rapid increases once AI features are adopted across the business. Leaders should forecast potential usage scenarios and evaluate pricing models based on expected growth. This helps identify whether per-token, per-request or compute-based pricing will remain sustainable as adoption expands.
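Because growth is rarely linear, it helps to project costs under several scenarios rather than relying on a single estimate. The sketch below compounds a hypothetical monthly request volume at three illustrative growth rates; the starting volume, growth rates and blended cost per request are all assumptions to be replaced with your own figures.

```python
def project_monthly_costs(
    start_requests: float,
    monthly_growth: float,
    months: int,
    cost_per_request: float,
) -> list[float]:
    """Monthly cost when request volume compounds at a fixed growth rate."""
    costs = []
    requests = start_requests
    for _ in range(months):
        costs.append(requests * cost_per_request)
        requests *= 1 + monthly_growth
    return costs


# Illustrative assumptions: 1M requests in month one at $0.002 per request.
SCENARIOS = {"flat": 0.0, "steady": 0.10, "rapid adoption": 0.30}

for label, growth in SCENARIOS.items():
    costs = project_monthly_costs(1_000_000, growth, months=12, cost_per_request=0.002)
    print(f"{label:<15} month 12: ${costs[-1]:>10,.0f}   year total: ${sum(costs):>11,.0f}")
```

Under these assumptions, the same feature costs about $24,000 over a flat year but roughly six times as much if adoption compounds at 30% per month. Running each candidate pricing model through scenarios like these shows which one stays sustainable.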

5. Evaluate Internal Capabilities and Operational Fit

Some platforms are simple to adopt but less flexible. Others offer deep customization but require stronger engineering support. The right choice depends on the organization’s talent, structure and ability to manage ongoing operations. Leaders should realistically assess internal capacity before choosing a platform that may be difficult to maintain over time.

6. Shortlist Two or Three Platforms for Comparison

Once the strategic filters are applied, most businesses find that only a handful of providers remain suitable. Evaluating a short list allows teams to focus on platforms that meet both functional and strategic criteria without overwhelming decision-makers.
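A simple weighted scorecard can keep the shortlist comparison anchored to business priorities. In the sketch below, the platforms, criteria, weights and 1-to-5 scores are all hypothetical; non-negotiables are applied as pass/fail gates before any weighting, so a strong overall score cannot compensate for a missing requirement.

```python
# Hypothetical weights reflecting one organization's priorities (sum to 1.0).
WEIGHTS = {"compliance": 0.30, "cost": 0.25, "latency": 0.20,
           "team_fit": 0.15, "flexibility": 0.10}

# Non-negotiables: minimum acceptable score per criterion.
HARD_MINIMUMS = {"compliance": 4}

# Hypothetical 1-5 scores gathered during evaluation.
SCORES = {
    "Platform A": {"compliance": 5, "cost": 3, "latency": 4, "team_fit": 4, "flexibility": 3},
    "Platform B": {"compliance": 3, "cost": 5, "latency": 4, "team_fit": 5, "flexibility": 4},
    "Platform C": {"compliance": 4, "cost": 4, "latency": 5, "team_fit": 2, "flexibility": 5},
}


def passes_gates(scores: dict[str, int]) -> bool:
    """True only if every non-negotiable criterion meets its minimum."""
    return all(scores[c] >= minimum for c, minimum in HARD_MINIMUMS.items())


def weighted_score(scores: dict[str, int]) -> float:
    if abs(sum(WEIGHTS.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1.0")
    return sum(weight * scores[c] for c, weight in WEIGHTS.items())


eligible = [p for p, s in SCORES.items() if passes_gates(s)]
ranking = sorted(eligible, key=lambda p: weighted_score(SCORES[p]), reverse=True)
for platform in ranking:
    print(f"{platform}: {weighted_score(SCORES[platform]):.2f}")
```

Platform B would rank first on weighted score alone (4.10), but it is excluded because it misses the compliance minimum, mirroring the filter-first approach of the non-negotiables.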

7. Run a Structured Proof of Concept and Measure Business Impact

A well-designed proof of concept validates performance, cost and operational fit under real conditions. It should measure practical indicators such as latency consistency, cost per inference, integration time and expected scaling behavior. These insights empower leaders to move forward with confidence rather than relying on assumptions or vendor claims.
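Once latency samples and billing totals are collected, the proof-of-concept indicators can be summarized in a few lines of analysis. The sketch below uses a nearest-rank percentile with hypothetical sample data, a hypothetical 800 ms p95 target and a hypothetical cost total; a real evaluation would use far larger samples gathered under representative load.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, adequate for proof-of-concept sample sizes."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_poc(latencies_ms: list[float], total_cost_usd: float,
                  requests: int) -> dict[str, float]:
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "max_ms": max(latencies_ms),
        "cost_per_inference_usd": total_cost_usd / requests,
    }


# Hypothetical PoC data: 20 latency samples and the bill for 5,000 requests.
latencies = [210, 220, 230, 240, 250, 260, 270, 280, 290, 300,
             310, 320, 330, 340, 350, 360, 370, 380, 900, 1200]
P95_TARGET_MS = 800

summary = summarize_poc(latencies, total_cost_usd=12.50, requests=5_000)
print(summary)
print("Meets p95 target:", summary["p95_ms"] <= P95_TARGET_MS)
```

Here the median looks healthy at 300 ms, yet the 95th percentile misses the target because of a few slow outliers. This is why latency consistency, not average speed, is the figure to compare across platforms.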

How Titan Supports Businesses in Selecting and Deploying AI Inference Platforms

Selecting an AI inference platform is a strategic decision that affects every stage of an organization’s AI journey. It shapes the cost structure, performance, scalability and long-term viability of AI investments. For many businesses, navigating the growing landscape of providers, pricing models and compliance requirements can be overwhelming without the right technical and strategic guidance.

This is where our team plays a meaningful role. We support enterprises by combining technical expertise with a business-first approach, ensuring that AI adoption aligns with organizational goals and delivers measurable value.

1. Strategic Assessment of AI Readiness and Platform Requirements

We begin by helping organizations understand their current capabilities and long-term objectives. This includes evaluating existing systems, identifying potential use cases and clarifying the performance, governance and integration requirements that the inference platform must support. The result is a clear roadmap that reduces uncertainty during the platform selection process.

2. Comparison and Evaluation of AI Inference Platforms

Our team analyzes the strengths and limitations of cloud providers, foundation model labs and specialist platforms through the lens of your business needs. By mapping each option to use cases, cost projections and operational expectations, we help leaders narrow their choices to the platforms that offer the best fit for both immediate priorities and future growth.

3. Architecture Design and Integration Support

Once a platform is selected, we design inference architectures that ensure reliable performance, predictable spending and seamless integration with existing applications. This includes considerations such as request routing, data handling, monitoring and scaling. Our goal is to create an environment where AI features operate smoothly and can expand without friction.

4. Implementation, Testing and Optimization

We guide organizations through the full implementation process, from initial configuration to production deployment. During this phase, we validate performance through benchmarking, load testing and real-world usage scenarios. We also help optimize inference workloads to control cost while maintaining the level of performance needed for customer and employee interactions.

5. Long-Term Support and Continuous Improvement

AI systems evolve and so do the platforms that power them. We provide ongoing support to monitor performance, refine cost efficiency and adapt architectures to new use cases as the business expands. This ensures that AI remains a scalable and sustainable capability rather than a one-time initiative.

Across these stages, our objective is consistent: to help organizations adopt AI responsibly and cost-effectively, with long-term success in mind. For a closer look at our AI, cloud and software development capabilities, you can explore our services page.

Conclusion

Selecting an AI inference platform is more than a technical choice. It is a strategic decision that influences how efficiently an organization can scale AI, manage costs and deliver consistent value across its operations. Because every business has different requirements and levels of readiness, there is no single platform that fits all scenarios. The right choice depends on clear priorities and a long-term view of how AI will support the organization.

A structured evaluation process enables leaders to navigate a complex landscape with confidence, ensuring that AI investments yield meaningful outcomes. Our team supports organizations through this process by assessing their needs, comparing platform options and designing architectures that balance performance, cost and governance.

If your business is exploring how to operationalize AI or planning for the next stage of deployment, we are ready to assist. You can reach out to us through our contact page to begin the conversation.

A thoughtful platform choice creates the foundation for scalable and resilient AI capabilities. With the right guidance, your organization can unlock solutions that grow in line with your ambitions and deliver a lasting impact.