Summary

AI is scaling faster than enterprise oversight, creating new risks in 2026.

AI Governance Platforms provide the guardrails needed for safe, transparent, and compliant AI deployment.

Strong governance reduces bias, model drift, and regulatory exposure while improving decision quality.

High-risk sectors like healthcare, finance, retail, and public services require governance as a core operational layer.

With governance embedded into workflows, organizations scale AI confidently and protect long-term trust.

AI is becoming deeply embedded in how organizations make decisions, serve customers, and run operations. Yet as adoption accelerates, many leaders face a growing challenge: AI is scaling faster than their ability to control it. This gap is now one of the biggest risks for businesses entering 2026.

Global adoption forecasts highlighted by Edge AI Vision expect AI users worldwide to surpass 378 million in 2025, a sign of how quickly intelligent systems are moving from experimentation to core business infrastructure.

The issue is no longer whether companies can deploy AI. The real issue is whether they can maintain AI that is safe, transparent, compliant, and aligned with organizational standards. Without proper guardrails, AI can produce biased decisions, expose sensitive information, misinterpret context, or trigger regulatory violations.

This is why AI governance platforms have become essential. They provide the structure, oversight, and accountability needed to manage how AI behaves across teams and systems. In 2026, they are no longer optional. They are the foundation for scaling AI responsibly and protecting long-term business trust.

2026 — Why AI Governance Has Become the No.1 Enterprise Priority

AI has become deeply embedded in core business operations. It now plays a role in decisions that affect customers, financial outcomes, workforce planning, risk controls, and even public services. As AI assumes greater responsibility, the impact of errors or unchecked model behavior becomes significantly more severe. This is the fundamental reason governance has moved to the top of the executive agenda in 2026.

The speed of adoption has also outpaced internal oversight. Most organizations now operate multiple AI models across different departments, but the controls around these systems often remain fragmented. When marketing, operations, finance, and customer service each deploy AI independently, it becomes difficult to maintain consistency, accountability, and visibility across the organization. This “governance gap” increases the likelihood of bias, drift, or decisions that conflict with regulatory requirements or ethical standards.

Regulation is intensifying at the same time. Frameworks such as the EU AI Act require organizations to demonstrate fairness, transparency, and traceability for high-risk AI systems. Compliance expectations are rising globally, and non-compliance now carries significant financial penalties and reputational risk. For industries such as healthcare, finance, and the public sector, governance has evolved from a recommended practice to a legal and operational necessity.

Customer expectations are also shaping this shift. People increasingly seek assurance that AI-driven decisions are fair, secure, and explainable. Enterprises that cannot clearly articulate how their AI works risk losing trust, market credibility, and long-term loyalty.

In 2026, the conversation about AI has undergone a fundamental shift. The question is no longer whether organizations should adopt AI. The question is whether they can control it. This is why AI governance platforms have become essential. They help organizations strengthen oversight, align AI behavior with business and regulatory expectations, and ensure every model deployed across the enterprise operates safely and consistently at scale.

What AI Governance Really Means in 2026 — A Business-Centric Definition

AI governance is no longer just a set of policies or compliance checklists. In 2026, it represents a structured operating approach that guides how AI systems behave, how decisions are monitored, and how risks are managed across the organization. This shift stems from a fundamental realization: AI does not merely automate processes; it actively shapes outcomes that impact customers, financial integrity, and organizational trust.

A business-centric view of AI governance focuses on four core pillars that ensure AI remains safe, predictable, and aligned with enterprise standards.

1. Risk and Safety Management

This involves continuously observing how AI models behave, identifying anomalies early, and preventing harmful results. Effective oversight helps organizations detect model drift, shifts in data quality, or logic deviations that could compromise decision-making.

2. Transparency and Explainability

Leaders, regulators, and end users increasingly expect clarity about how AI produces its outputs. Explainability ensures decisions can be understood, validated, and trusted, especially in high-impact domains such as healthcare, compliance, and credit assessment.

3. Compliance and Accountability

Enterprises must ensure their AI systems meet tightening regulatory requirements and ethical expectations. Governance establishes ownership for each AI system and clarifies who is responsible for monitoring decisions, documenting processes, and addressing risks. Studies such as McKinsey’s future of work analysis emphasize that organizations adopting automation and AI at scale must develop stronger oversight structures to manage responsibilities and ensure safe integration across the workforce.

4. Lifecycle Governance and Monitoring

AI models evolve as data and context change. Governance ensures that teams maintain continuous oversight from the moment a model is trained to the point it is deployed, updated, or retired. This helps organizations maintain stable performance and make decisions that align with business objectives over time.

In 2026, effective AI governance is not about slowing innovation. It is about providing the clarity, accountability, and structure organizations need to scale AI responsibly. AI governance platforms bring these elements together, giving enterprises a unified foundation to manage AI behavior reliably as adoption accelerates.

The Governance Challenges Enterprises Must Solve in 2026

As AI expands into more critical areas of the business, organizations are confronting new risks that traditional IT controls cannot manage. These challenges do not stem from AI being ineffective. They occur because the systems surrounding AI, including oversight, accountability, monitoring, and data discipline, have not kept pace with the rapid development of AI. In 2026, enterprises must resolve several high-impact governance gaps.

1. Unpredictable Model Behavior and Drift

AI models change as they are retrained on new data, and the data they encounter in production shifts over time. Without proper oversight, accuracy can degrade, decision patterns can shift unpredictably, and subtle errors may go undetected. This is especially dangerous in domains where consistency is essential, such as underwriting, fraud detection, clinical evaluations, and demand forecasting.

2. Lack of Data Lineage and Visibility

Many organizations still operate without a clear view of where training data originates, how it is transformed, and how it influences model outputs. When data lineage is unclear, teams cannot reliably audit decisions or prove compliance. This also makes it harder to diagnose root-cause issues when AI systems behave unexpectedly.

3. Rising Regulatory Pressure and Compliance Complexity

Expectations for transparency and fairness are becoming increasingly stringent, particularly for high-risk applications such as healthcare diagnostics, financial scoring, and public-sector decision-making systems. Guidance from industry research indicates that healthcare AI, for instance, must adhere to rigorous validation and monitoring processes to prevent bias and ensure safe deployment, as highlighted in Uptech's analysis on AI governance in healthcare. Similar expectations are emerging across finance, insurance, government services, and regulated industries worldwide.

4. Fragmented AI Adoption Across Business Units

Most enterprises deploy AI in silos. Marketing teams adopt generative models, operations rely on forecasting engines, and finance implements risk simulations. When each department chooses different tools and workflows, organizations lose the ability to enforce consistent policies, track decisions, or maintain unified accountability across all AI systems.

5. Workforce Preparedness and Oversight Skills

AI governance necessitates new capabilities, including the ability to interpret model behavior, question outputs, detect anomalies, and make informed decisions about when to intervene. Many teams have not yet developed these skills at scale. Without proper expertise, even well-designed AI systems can be misinterpreted or misused, thereby increasing operational and ethical risks.

6. Slow or Inefficient Incident Response

When AI systems fail, enterprises often lack standard response protocols. Teams may struggle to identify the problematic model version, trace the data that influenced the output, or roll back the system in a timely manner. This leads to prolonged disruptions and undercuts confidence in AI-driven processes.

These challenges highlight a central truth: enterprises do not simply need better AI models. They need AI governance platforms that provide structure, visibility, and control. Without these foundations, AI becomes difficult to scale safely and nearly impossible to audit.

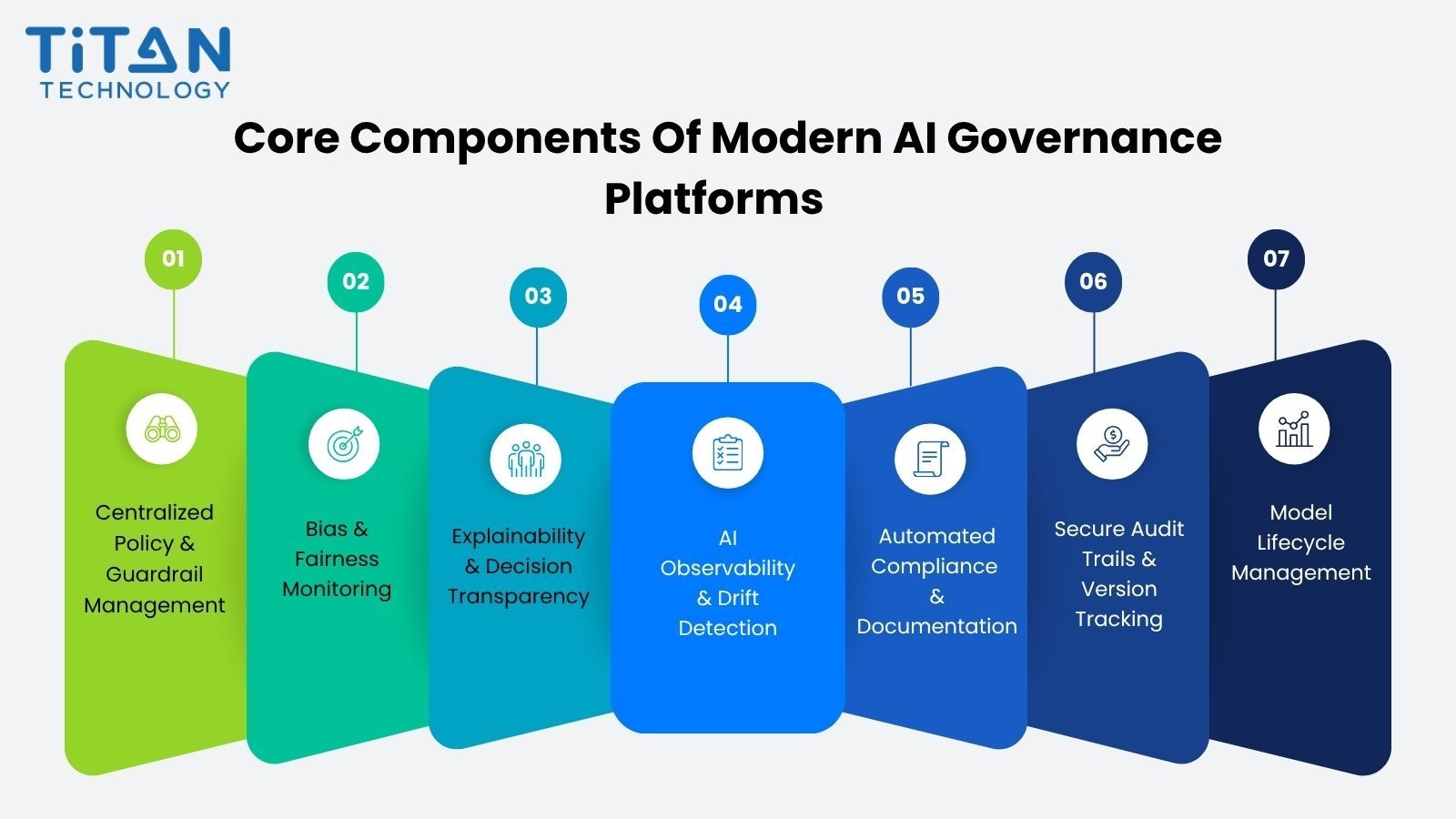

Core Components of Modern AI Governance Platforms

Modern enterprises require more than policies and guidelines to effectively manage AI at scale. They need structured systems that bring consistency, visibility, and accountability to every model operating across the organization. This is where AI governance platforms create real value. Rather than functioning as a single tool, these platforms combine multiple capabilities that work together to ensure AI remains safe, compliant, and reliable.

1. Centralized Policy and Guardrail Management

Enterprises require a unified place to define how AI systems should behave. Policy engines allow organizations to set standards for acceptable model behavior, data usage, ethical requirements, and approval workflows. This ensures that decisions are governed consistently across departments, regardless of the number of tools or models deployed.
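To make the idea of a policy engine concrete, here is a minimal Python sketch of a guardrail check. Every name in it (`Policy`, `ModelRecord`, `evaluate`, the data classes and approval labels) is a hypothetical illustration rather than the API of any particular governance product, and a real platform would load policies from a managed store instead of code.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Hypothetical guardrail: permitted data classes and required sign-offs."""
    name: str
    allowed_data_classes: frozenset
    required_approvals: frozenset


@dataclass
class ModelRecord:
    """Hypothetical description of a model submitted for review."""
    model_id: str
    data_classes: set
    approvals: set = field(default_factory=set)


def evaluate(model: ModelRecord, policy: Policy) -> list:
    """Return readable violations; an empty list means the model passes."""
    violations = []
    # Data the model uses that the policy does not permit.
    for dc in sorted(model.data_classes - policy.allowed_data_classes):
        violations.append(
            f"{model.model_id}: data class '{dc}' not allowed by {policy.name}"
        )
    # Sign-offs the policy requires that the model has not yet received.
    for ap in sorted(policy.required_approvals - model.approvals):
        violations.append(
            f"{model.model_id}: missing approval '{ap}' required by {policy.name}"
        )
    return violations
```

Because the same `evaluate` function runs for every department's models, the rules stay consistent regardless of which team built the system.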

2. Bias and Fairness Monitoring

Bias can enter AI systems through data or model design. As AI adoption grows in sensitive sectors such as healthcare, where the market for AI-enabled medical technologies is projected to expand significantly in the coming years, the need for continuous fairness evaluation becomes even more critical. Insights from Statista’s AI medical device market analysis demonstrate how quickly AI is being integrated into regulated environments, making bias monitoring essential for compliance and patient safety.
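One simple way to picture continuous fairness evaluation is to compare how often each group receives a positive decision. The Python sketch below computes per-group selection rates, the demographic parity difference, and the disparate impact ratio. The function names and the toy group labels are assumptions made for illustration, and the threshold at which a gap becomes unacceptable is a policy decision the organization has to set for itself.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Share of positive decisions (1 = favorable) for each group label."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        positives[g] += int(d)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(rates):
    """Demographic parity difference: highest minus lowest selection rate."""
    return max(rates.values()) - min(rates.values())


def impact_ratio(rates):
    """Disparate impact ratio: lowest divided by highest selection rate."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0
```

A monitoring job could run these functions on each day's decisions and raise an alert when the gap or ratio crosses the agreed threshold.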

3. Explainability and Decision Transparency

AI-driven decisions must be understandable. Explainability tools help teams see which factors influenced an output and why a model reached a particular conclusion. This is vital for industries that require auditability, such as finance and insurance, and supports internal teams when communicating decisions to regulators or customers.
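For a simple linear scoring model, "which factors influenced an output" can be shown directly: each feature's contribution is its weight times its distance from a baseline value. The Python sketch below assumes such a linear model, and the feature names in the usage note are invented for illustration. More complex models need dedicated attribution techniques that this sketch does not cover.

```python
def contributions(weights, baseline, features):
    """Per-feature contribution to a linear score, relative to a baseline input."""
    return {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }


def explain(weights, baseline, features, top=3):
    """Return the `top` features ranked by absolute contribution."""
    contrib = contributions(weights, baseline, features)
    ranked = sorted(contrib.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return ranked[:top]
```

For example, with hypothetical weights `{"income": 0.5, "debt": -2.0}`, a reviewer would see that a rise in `debt` pulled the score down more than a rise in `income` pushed it up.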

4. AI Observability and Drift Detection

AI models evolve over time. Platforms need to track performance shifts, detect anomalies, and alert teams when a model behaves differently from what is expected. Observability ensures that data changes, environment shifts, or incomplete inputs do not compromise decision quality.
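Drift detection often starts with a statistic that compares recent inputs or scores against a baseline sample. One widely used option is the Population Stability Index (PSI), sketched below in plain Python. The bin count, the `eps` floor for empty bins, and any alert threshold are assumptions to tune per model; this is an illustration of the technique, not the method of a specific platform.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample."""
    lo, hi = min(expected), max(expected)
    # Equal-width bins over the baseline range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / bins or 1.0

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor each share at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins in identical proportions, and larger values indicate a bigger shift that may warrant review or retraining.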

5. Automated Compliance and Documentation

Regulatory requirements are expanding worldwide. Automated compliance modules help organizations map models to risk categories, generate required documentation, and maintain audit-ready records. This reduces the manual burden on legal and risk teams, ensuring that processes remain consistent across the enterprise.

6. Secure Audit Trails and Version Tracking

Every AI decision should be traceable. Governance platforms maintain detailed records of how models were trained, which datasets were used, when updates occurred, and how outputs have changed over time. This transparency is essential for resolving incidents quickly and demonstrating accountability.
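A common way to make an audit trail tamper-evident is to chain entries together by hash, so that altering any earlier record invalidates everything after it. The Python sketch below shows the idea with the standard library. The event fields are hypothetical, and a production system would also need durable storage, access control, and trusted timestamps.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with a canonical JSON of the event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


def append_entry(log, event):
    """Append an event dict, linking it to the previous entry by hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)})


def verify(log):
    """Recompute every hash; return False if any entry was altered or reordered."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(prev_hash, entry["event"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Each training run, dataset change, or deployment would be appended as one event, and `verify` can be run before an audit to confirm the history is intact.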

7. Model Lifecycle Management

AI lifecycle governance covers the entire journey from data preparation and model development to deployment, monitoring, and retirement. Effective lifecycle controls ensure that AI systems remain aligned with business objectives and regulatory expectations as they scale.
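Lifecycle controls can be pictured as a small state machine that only permits approved moves between stages. The Python sketch below uses the stages named above; the specific transitions allowed are an illustrative assumption, and each organization would define its own.

```python
# Allowed stage transitions (illustrative; adapt to your own process).
TRANSITIONS = {
    "data_preparation": {"development"},
    "development": {"data_preparation", "deployment"},
    "deployment": {"monitoring"},
    "monitoring": {"development", "retirement"},
    "retirement": set(),
}


def advance(current, target):
    """Return the new stage, or raise if the move is not an approved transition."""
    if target not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move from {current} to {target}")
    return target
```

Encoding the transitions as data makes them easy to review and change without touching the enforcement logic.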

These components form the foundation of AI governance platforms in 2026. They enable enterprises to move beyond reactive oversight and establish a proactive, end-to-end governance structure that grows with their AI ambitions.

Industry Use Cases Where Governance Is Non-Negotiable

AI is expanding into domains where decisions have a direct impact on human well-being, financial stability, and public trust. In these environments, governance is not a strategic advantage but an operational obligation. As AI becomes more deeply embedded in sector-specific workflows, oversight becomes essential to ensure fairness, transparency, and safety.

Healthcare — The Most Regulated AI Domain

Healthcare is experiencing one of the fastest adoption curves of AI. Hospitals and medical technology companies increasingly rely on AI for diagnostics, imaging interpretation, triage support, and treatment recommendations. The AI medical device market alone reached 6.1 billion USD in 2024 and is projected to exceed 52 billion USD by 2031, growing at a rate of more than 24 percent annually, according to Statista.

As AI begins to influence clinical decisions at this scale, governance becomes mandatory. Healthcare organizations must continuously validate models, ensure that training data is unbiased, monitor for drift, and maintain transparent auditability for regulators and clinicians. Given the sector’s regulatory sensitivity, even small inconsistencies can impact patient outcomes, making strong governance a core requirement for safe deployment.

Finance & Fintech

Financial institutions depend on AI for credit decisions, fraud detection, portfolio analysis, and risk modeling. The sensitivity of these use cases means that even minor inaccuracies can result in discriminatory outcomes, regulatory penalties, or financial losses. Governance frameworks help financial organizations maintain explainability, ensure compliance across jurisdictions, and document model decisions at a level suitable for audits and regulatory reviews. As AI-driven automation grows within mission-critical financial processes, governance serves as the safeguard against systemic risk.

Retail & E-Commerce

AI powers the personalization engines, pricing models, and forecasting systems used throughout modern retail. While these technologies enhance the customer experience, they also carry risks related to privacy, fairness, and unintended algorithmic bias. Strong governance ensures that AI-driven interactions remain transparent, customer-centric, and aligned with ethical expectations. It also protects organizations against brand damage that may result from inappropriate personalization or unfair pricing logic.

Public Sector & Smart City Systems

Government agencies and smart-city operators deploy AI for verification, resource allocation, mobility intelligence, and public safety applications. These systems must meet extremely high standards of fairness and accountability, as their decisions significantly impact citizens’ rights and access to public services. Effective governance ensures that AI-driven public systems remain transparent, interpretable, and aligned with policy obligations. It also provides the comprehensive audit trails needed to maintain public trust.

The Business Impact: Why Strong Governance Drives Enterprise Value

AI governance is not just a compliance requirement. It is a performance multiplier that shapes how effectively organizations scale AI. When model behavior is predictable and transparent, businesses reduce the risk of operational failures, biased decisions, and regulatory exposure. This stability protects both customer trust and brand reputation.

Governance also improves execution efficiency. With clear oversight, documentation, and monitoring integrated into the AI lifecycle, teams avoid repeated troubleshooting and can focus on refining models. As a result, deployment cycles become faster and AI initiatives progress without unnecessary friction.

From a strategic perspective, governance enhances decision quality. Leaders can rely on outputs that are consistent, explainable, and defensible. This strengthens forecasting, customer decisioning, and operational planning because AI insights can be trusted.

Most importantly, organizations with strong governance achieve higher long-term ROI. AI becomes sustainable, scalable, and aligned with business objectives rather than existing as isolated experiments. This allows enterprises to confidently expand AI use cases while maintaining full control and accountability.

Common Pitfalls When Implementing AI Governance (and How to Avoid Them)

Many organizations understand why AI governance matters but still struggle to implement it effectively. These challenges often arise not from a lack of commitment, but from structural missteps that weaken oversight and slow adoption. In 2026, the following pitfalls are the most common when enterprises begin establishing governance programs.

1. Treating Governance as a Secondary Initiative

Some organizations approach governance as an optional add-on owned by a single team. Without executive sponsorship, clear accountability, or dedicated resources, governance becomes fragmented and fails to keep up with the pace of AI deployment.

How to avoid it: Position governance as an enterprise-wide program with defined ownership, budget allocation, and ongoing cross-functional coordination.

2. Creating Policies That Are Too Complex to Apply

Policies often fail because they are overly academic or disconnected from operational reality. When teams cannot interpret or apply the rules in their daily workflow, governance exists on paper but not in practice.

How to avoid it: Develop concise, actionable guidelines with real examples and integrate them directly into existing tools and processes.

3. Allowing Each Department to Build Governance in Isolation

When marketing, finance, operations, and data science create their own standards, organizations end up with inconsistent practices. AI systems follow different rules, and leaders lose visibility into how decisions are being made across departments.

How to avoid it: Establish a unified governance framework and apply it consistently across all teams, supported by centralized oversight.

4. Overlooking Data Governance as the Foundation

Many AI failures originate from poor data quality, unclear lineage, or uncontrolled data access. Without strong data governance, organizations cannot explain model behavior or identify the root cause of unexpected outputs.

How to avoid it: Strengthen data governance early with quality checks, classification standards, clear lineage tracking, and well-defined access controls.

5. Lacking a Standardized Response When AI Malfunctions

When an AI system behaves unexpectedly, many enterprises do not have a defined response process. This slows investigation, extends incidents, and increases the impact on operations and customers.

How to avoid it: Create an AI incident response playbook outlining responsibilities, diagnostic steps, rollback procedures, and communication protocols.
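The rollback step of such a playbook depends on knowing which model version is live and which one preceded it. The Python sketch below shows a hypothetical in-memory registry that records promotions and supports a one-step rollback. The class and method names are assumptions for illustration, not the interface of any real model registry.

```python
class ModelRegistry:
    """Hypothetical in-memory registry tracking which model version serves traffic."""

    def __init__(self):
        self._history = []  # versions that have been active, oldest first

    @property
    def active(self):
        """The version currently serving traffic, or None if nothing is deployed."""
        return self._history[-1] if self._history else None

    def promote(self, version):
        """Make `version` the active one, keeping earlier versions for rollback."""
        self._history.append(version)

    def rollback(self):
        """Drop the active version and reinstate the previous one."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        failed = self._history.pop()
        return {"rolled_back_from": failed, "active": self.active}
```

The dictionary returned by `rollback` can be logged as part of the incident record, so responders know which version failed and which one replaced it.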

These pitfalls highlight a broader truth. Effective governance requires more than policies and intentions. Organizations need practical operational structures that guide how AI is developed, monitored, and improved. AI governance platforms provide the consistent foundation needed to embed governance into workflows and maintain control as AI adoption scales.

A Practical Framework for Choosing an AI Governance Platform (2026)

Choosing the right AI governance platform requires more than comparing feature lists. Enterprises need a structured evaluation approach that aligns the platform with organizational risk levels, regulatory expectations, and long-term AI maturity. The following framework reflects global recommendations, including guidance from the OECD’s AI Principles, which emphasize safety, transparency, accountability, and human oversight as the foundation for responsible AI.

1. Start with an AI Inventory and Risk Classification

Organizations should begin by mapping all AI systems currently in use. This includes identifying the purpose of each model, the data it relies on, and the decisions it influences. Classifying each system by risk level helps determine the required level of governance. High-impact systems, such as financial scoring or clinical decision support, demand stronger controls than low-risk automation tools.
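As a rough illustration, an AI inventory can start as a list of structured records with a simple rule that assigns each system a risk tier. In the Python sketch below, the fields and the three-tier rule are assumptions chosen for clarity. They are not the risk categories of the EU AI Act or any other regulation, and a real classification would follow legal and risk guidance.

```python
from dataclasses import dataclass


@dataclass
class AISystem:
    """Hypothetical inventory entry for one AI system."""
    name: str
    purpose: str
    uses_personal_data: bool
    affects_individuals: bool  # e.g. credit, clinical, or benefits decisions


def risk_tier(system: AISystem) -> str:
    """Assign an illustrative tier: decisions about people rank highest."""
    if system.affects_individuals:
        return "high"
    if system.uses_personal_data:
        return "medium"
    return "low"


def inventory_by_tier(systems):
    """Group system names by tier so controls can be scoped per tier."""
    grouped = {"high": [], "medium": [], "low": []}
    for s in systems:
        grouped[risk_tier(s)].append(s.name)
    return grouped
```

Under this rule a credit-scoring model lands in the high tier while an internal document-tagging tool lands in the low tier, which matches the distinction drawn above.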

2. Assess Organizational Maturity and Governance Readiness

Before selecting a platform, enterprises must understand their current governance capabilities. This includes evaluating processes for model documentation, lineage tracking, monitoring, and compliance. Organizations with low maturity may require platforms that offer more guided workflows, while mature teams may prioritize configurability and advanced monitoring.

3. Define Core Requirements Based on Use Cases

Different industries face different governance needs. Financial institutions require explainability and auditability. Healthcare organizations require continuous validation and strict data protection. Public-sector agencies require transparency and fairness. A governance platform should align with these sector-specific demands rather than forcing a one-size-fits-all approach.

4. Evaluate Integration Capabilities

A governance platform must integrate seamlessly with existing data pipelines, MLOps workflows, model registries, and deployment environments. Without strong integration, governance becomes another silo, and the platform cannot deliver its intended oversight. Organizations should prioritize platforms that provide flexible APIs and support for cloud, on-premises, and hybrid environments.

5. Review Vendor Expertise and Long-Term Support

Governance is not a one-time implementation. It requires ongoing updates as regulations evolve and new AI models are introduced. Enterprises should evaluate whether vendors have demonstrated experience in responsible AI, sector-specific compliance, and continuous innovation. Vendors who actively contribute to global AI governance standards are typically better positioned for long-term partnership.

6. Pilot with Clear KPIs Before Scaling

A pilot program helps validate whether the platform meets operational expectations. Teams should define measurable KPIs such as model monitoring accuracy, reduction in governance overhead, time saved during audits, or improvement in explainability. A successful pilot ensures that the platform can scale across the organization with confidence.

How Titan Helps Organizations Build Responsible, Scalable AI

Scaling AI safely requires more than strong models. It requires a governance foundation that ensures every system is transparent, reliable, and aligned with business expectations. Titan Technology brings this structure to organizations from the very beginning.

We embed governance directly into AI workflows, from data preparation to deployment. This approach transforms oversight into a built-in capability rather than a separate checklist. Models become fully traceable, decisions become explainable, and risks are identified before they escalate.

Our teams combine engineering expertise with governance-first design. We help organizations establish clear standards for model behavior, automate validation and monitoring, and create audit-ready documentation that supports regulatory requirements without hindering innovation.

The result is an AI ecosystem that scales with confidence. Titan enables enterprises to move beyond isolated AI initiatives and build a unified, well-governed environment where innovation can grow predictably and responsibly.

Conclusion

AI is now central to how organizations innovate, compete, and deliver value. But without strong governance, even the most advanced models can introduce risk, inconsistency, or compliance failures. In 2026, the organizations that succeed with AI are those that treat governance as a strategic capability, not an afterthought. With the right oversight structure, AI becomes more transparent, more reliable, and more aligned with long-term business goals.

AI governance platforms provide this foundation. They enable enterprises to scale AI with clarity, control, and confidence, ensuring every system operates responsibly as adoption accelerates. For leaders planning the next stage of their AI journey, governance is not just protection. It is the catalyst that turns experimentation into sustainable, enterprise-wide impact.

If you’re ready to build a responsible and scalable AI ecosystem, contact us to explore how our governance-first approach can support your transformation.